Generative AI — systems that can create text, images, code, designs, and even strategy-level suggestions — has moved from novelty to everyday tool in record time. What began as research prototypes and demos now sits on many employees’ desktops and phones, quietly changing what work gets done, who does it, and how organizations design roles. The result isn’t a single outcome (mass layoffs or utopia) but a complex reshaping of tasks, skill mixes, career paths, and workplace expectations.

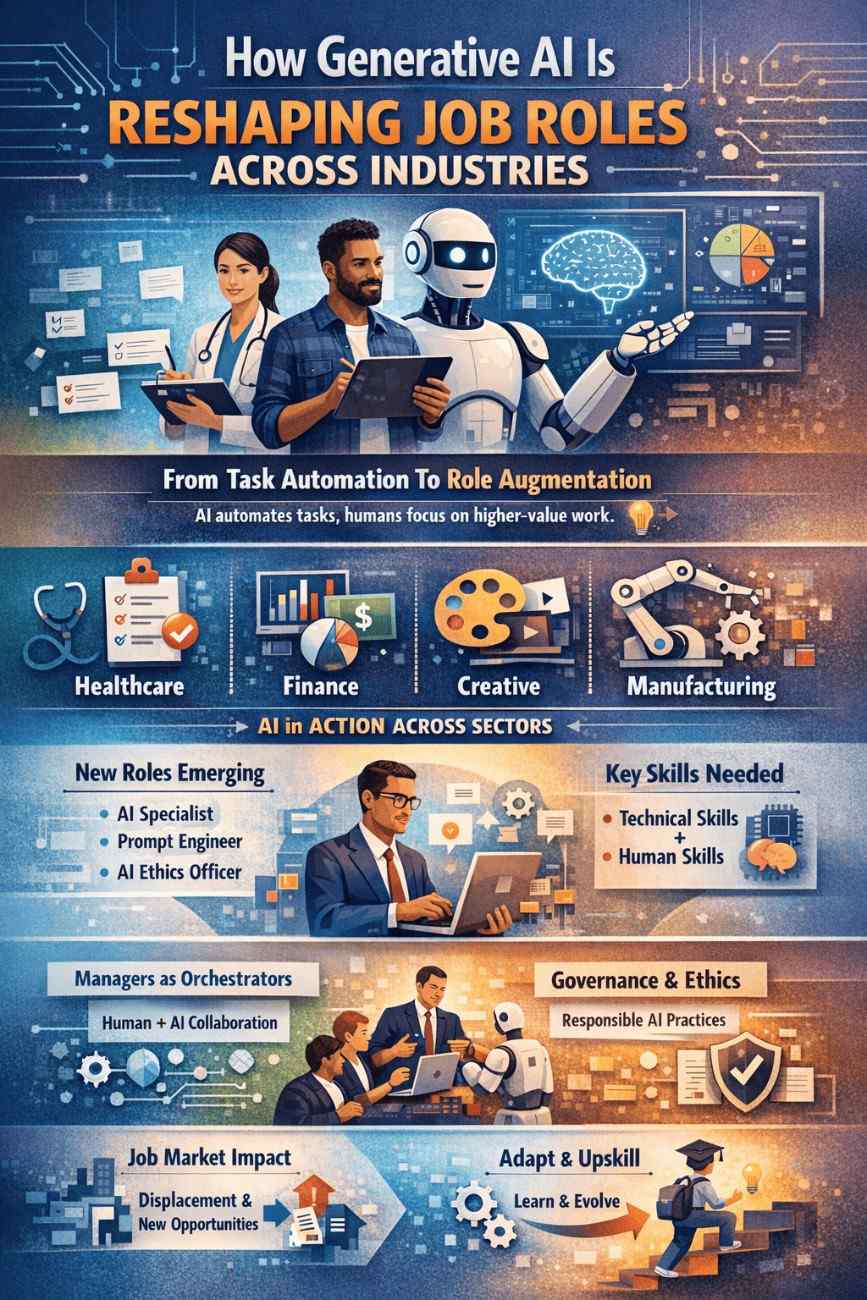

From task automation to role augmentation

A helpful way to think about generative AI’s effect is at the level of tasks, not jobs. Rather than replacing whole jobs overnight, GenAI often automates or accelerates specific activities within them, such as drafting reports, summarizing meetings, generating marketing copy, producing first-pass code, or suggesting designs. As a result, many roles are being augmented rather than eliminated: workers accomplish more, faster, and often at higher quality, while spending less time on the repetitive or formulaic parts of their work. As the tools improve, the share of tasks within each role that can be automated or augmented grows, prompting a rethink of where humans add the most value.

Industry snapshots: where changes are most visible

- Healthcare: Clinicians and administrators use GenAI for note-taking, drafting discharge summaries, triaging patient questions, and synthesizing medical literature, freeing clinicians to focus on diagnosis and patient interaction. Yet governance, privacy, and accuracy checks add new responsibilities, such as validating AI outputs and maintaining documentation.

- Financial services: With risk models, customer service chatbots, automated compliance checks, and rapid generation of investment narratives, analysts and compliance officers now supervise AI outputs, interpret results for stakeholders, and focus on scenario planning and judgment calls that models can’t make reliably.

- Creative industries: Designers, writers, and video producers increasingly use GenAI to create first drafts, mood boards, or storyboards. Creative professionals reallocate effort toward curation, concept refinement, and brand voice, work that requires critical taste and cultural insight.

- Manufacturing and operations: AI-driven scheduling, predictive maintenance, and documentation generation raise the bar for operational roles. Technicians must now work with AI tools, validate predictive signals, and implement preventive actions, which creates more highly skilled maintenance jobs and data-literate operational roles.

These snapshots show a common pattern: machines take on routine generation and synthesis; humans retain (and deepen) roles requiring judgment, stakeholder trust, and cross-domain thinking.

New roles, renamed roles, and shifting titles

As tools grow more capable, organizations aren’t just changing job descriptions — they’re inventing new functions. Expect to see more roles like "AI product manager," "prompt engineer," "AI governance lead," and "human-AI interaction designer." Some incumbents will have their titles and responsibilities updated to reflect widespread AI use; large firms are already reorganizing titles and structures to align with AI-powered workflows. This signals that companies view AI not as a temporary tool but as a structural capability that requires its own career ladders.

What skills will matter most

The rising demand is twofold. First, technical skills: prompt engineering, model evaluation, basic model fine-tuning, data curation, and an understanding of model limitations. Second, and equally important, human skills: critical thinking, ethical judgment, communication, cross-functional collaboration, and the domain expertise that lets workers interpret and apply AI outputs appropriately. Several studies suggest that even where a large share of tasks can be automated, the human skills surrounding those tasks become more valuable, and often scarcer. Many employers are investing heavily in reskilling and internal training to close this gap.

Managers become orchestrators and gatekeepers

Generative AI also shifts managerial work. Instead of just assigning tasks and checking outputs, managers increasingly design workflows in which humans and AI complement one another. They must decide which decisions AI can support autonomously, where human approval is needed, how to measure the quality of AI-augmented work, and how to keep teams motivated as routine work changes. Managers are becoming orchestrators of hybrid human-AI teams and stewards of responsible AI use.

Organizational implications: structure, governance, and ethics

Widespread GenAI adoption brings organizational consequences. Companies need new governance structures to oversee model risk, fairness, and privacy. They must build guardrails — human-in-the-loop checkpoints, audit trails, and clear ownership of AI outputs. They also face cultural challenges: employees worry about job security and fairness while leaders see productivity potential. Transparent communication, participatory design of AI tools, and visible upskilling programs help ease transitions and improve adoption.

The labor market: displacement, creation, and transition

Predictions vary, but a consistent finding in recent research is that generative AI will both displace certain tasks and create new roles and industries. Some routine jobs may shrink; at the same time, demand will grow for AI-literate specialists and for jobs that supervise, validate, and translate AI work into business value. Transition support—retraining, lateral moves, and social safety nets—will determine whether this shift is traumatic or a source of career mobility. Employers, educators, and policymakers each have a role in smoothing the transition.

Practical advice for workers and leaders

For professionals: get comfortable using GenAI as part of daily work, build complementary human skills (communication, ethics, domain depth), and pursue micro-credentials or hands-on projects that demonstrate AI fluency.

For leaders: treat GenAI as a capability rollout, not a one-off tool. Invest in change management, explicit governance, and measurement. Redesign roles around outcomes rather than tasks, and create clear career pathways for employees who take on new AI-related responsibilities.

For educators and policymakers: expand access to lifelong learning, incentivize employer-led reskilling, and update occupational standards to reflect hybrid human-AI workflows.

Conclusion: reshaping, not replacing

Generative AI is neither a job terminator nor a universal job creator; it is a powerful reshaper. By automating generation and synthesis, it reassigns human effort toward higher-value activities: judgment, relationship-building, strategic thinking, and ethical oversight. The net effect on careers will depend on how quickly organizations invest in reskilling, how equitably transitions are managed, and how thoughtfully leaders redesign jobs for a hybrid human-AI future. Those who treat GenAI as a partner and a capability to build, rather than a threat to ignore, will be best positioned to capture its upside while protecting their people.